MacSlash | Apple Announces Intel Xserve

OK, so I’ve never really developed this site into a “technology pundit’s page” like so many of my friends have (see blogroll), so I’ll point you to some comments I made about the new Xserves from Apple on MacSlash.

I REALLY wish that server makers would get out of this “must be ONE RACK UNIT” rut they are in. To achieve this supposed holy grail of server size, they are getting completely absurd in the one dimension nobody talks about… namely, depth. To Apple’s credit, they’ve offered a center-mount option for the Xserve since day one, but it is still way too long. The original is 28″ long, and this new Intel-CPU’ed Xserve iteration adds another 2″, bringing it to 30″.

I’m sorry folks, that’s beyond absurd. It is ludicrous.

I’ve always maintained that Dell builds such deep boxes to sell their own proprietary cabinets. Apple has no such excuse. I wonder where they’ve added the depth relative to the center-mount point. At the back? In the front? 1″ in both directions? Either way, it should make adding a Xeon Xserve to an already populated rack or cabinet of Xserves a challenge!

We use awesome Seismic Zone Four rated cabinets from B-Line, which are adjustable with regard to the mounting rails, but once set, you really don’t want to move them. If you put a server that is 28″ or longer into them, the cable management starts getting tough and ends up presenting a real impediment to airflow. With the Dell gear we have to remove the doors to make it work, which, when you think about it, pretty much negates the whole reason for putting a server in a cabinet! The majority of our Xserves are mounted in “open” Chatsworth racks, those excellent and bulletproof workhorses of the high-tech world. This removes all the airflow issues, but row density suffers because you have to accommodate the Xserve, the cables, the people space front and back, PLUS the space to slide the Xserve chassis fully open without interfering with the row of servers in front of it. I realize what I’m about to say is counter-intuitive, but here is some reality for you:

1U servers such as the Apple Xserve actually lower your possible density of installation.

I’ll repeat…

1U servers such as the Apple Xserve actually lower your possible density of installation.

I could have a far more efficient datacenter layout with 2U servers if their form factor were 2U x 18″ x 18″. This would allow me to space my ROWS of racks closer together and, more importantly, to make far more efficient use of my electrical power per square foot than with 1U boxes. If you do the math on Apple’s new Xeon Xserve, the theoretical maximum electrical draw of a rack full of them is 336 Amps @ 120 Volts. Of course servers rarely run at their maximums, but that is a terrifying number. The “standard” amount of power per rack in the business these days is 20-60 Amps. Given that it is in reality IMPOSSIBLE to have a rack fully populated with 1U/2PSU boxes, due to the cable management nightmare of power cords and the heat load of putting so much power in so small a space, why bother building 1U boxes? Why add insult to injury by making them as long as an aircraft carrier deck too?
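The 336 A figure is easy to sanity-check. Here is a minimal Python sketch of the arithmetic, assuming a 42U rack holding one server per U. Under that assumption the quoted total implies roughly 8 A maximum per server. Both the rack height and the per-server draw are assumptions for illustration, not values taken from Apple’s spec sheet.

```python
# Back-of-the-envelope rack power math for the Xeon Xserve figures above.
# ASSUMPTIONS: a 42U rack, and the quoted 336 A spread evenly across it,
# i.e. about 8 A maximum per 1U server at 120 V. Not Apple spec-sheet values.

RACK_UNITS = 42          # assumed usable rack height, in U
AMPS_PER_SERVER = 8.0    # assumed maximum draw per server (336 A / 42)
VOLTS = 120              # line voltage quoted in the post


def full_rack_draw(units: int = RACK_UNITS, amps: float = AMPS_PER_SERVER) -> float:
    """Theoretical maximum current if every 1U slot holds one server."""
    return units * amps


def servers_within_budget(budget_amps: float, amps: float = AMPS_PER_SERVER) -> int:
    """Number of servers a rack's power budget covers if all run at maximum draw."""
    return int(budget_amps // amps)


if __name__ == "__main__":
    draw = full_rack_draw()
    print(f"Full rack: {draw:.0f} A @ {VOLTS} V = {draw * VOLTS / 1000:.1f} kW")
    for budget in (20, 60):
        n = servers_within_budget(budget)
        print(f"{budget} A budget: {n} servers at max draw, {RACK_UNITS - n} U left empty")
```

Under these assumptions a full rack works out to about 40 kW, and a 20-60 A rack budget covers only 2 to 7 servers at maximum draw, leaving most of the 42 slots empty.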

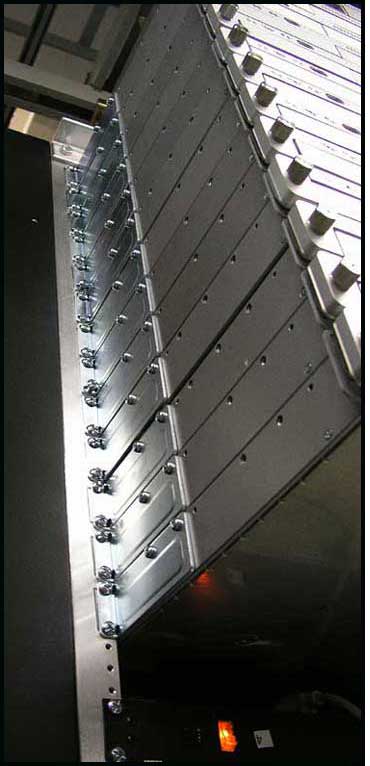

THIS is the ideal size for a server. 2U in height, and roughly 18″ square in the other two dimensions. It makes for perfect rack density, row density, and the most efficient use of power (and of course cooling) per square foot of datacenter space. Airflow becomes manageable. Cable management much easier. Storage options more flexible. Heat issues minimized. Etc. Do any of the server makers ever visit datacenters? Or do they just assume that 1U is what people want? Do they just listen to trade rags (written by people who sell advertising, not people who run datacenters!), or do they actually get out in the field and talk to facility operators?

I wonder.

My other beef with the Xserve has been Apple’s complete “slave to fashion” reluctance to put USEFUL ports on the FRONT of the unit. They REALLY need to put the USB and video ports on the front of the Xserve, NOT the back. Why force somebody who has to work at the console (and trust me, OS X Server isn’t mature and stable enough to run headless forever…) to work in the HOT AISLE? The back side of a stack of servers is HOT, and a very uncomfortable place to work. If you put the ports on the front, where the power button and optical drive already are, there will never be a need to walk all the way around the row of racks and try to remember which server was the one you were working on. Apple actually resorted to a hardware hack (buttons on one side that flash lights on the other) to work around this design flaw. In reality, the only time you really SHOULD be looking at the back of one of these servers is when you are installing it. After that, all admin functions should be performed from the front side of the server.

Again, makes you wonder if Apple actually spent any time in a datacenter or considered any functionality in their design, or was it just meant to look good in a glossy brochure or on a trade show floor?

Plus, the Xserve is a pain to rackmount and the case still flexes too much. A 2U server could use L-shaped rails and greatly simplify racking for a single nocturnal geek; right now getting an Xserve into a rack takes two people, whereas even my ancient HP NetServer 2U machines are ridiculously easy to set up. But those Xserves sure do look purty, I guess…

We’re using 2U Intel 2300s/2400s in our datacenters, mounted in 4-post Chatsworth racks with custom cross bracing. Works well for us. We looked at using 1U’s, but given the power density per rack and cooling constraints, the 2U Intels are a better fit for us.

Pooser (The puking presenter) said:

“But those Xserves sure do look purty”

Bingo! From my years of experience working with them, my conclusion is that Apple allowed form to lead function with the design of the Xserve. To Apple, how the Xserve LOOKS is far more important than how well it WORKS. They do look slick as hell when crammed into a rack at high density… that is, from the front, with NO view of the sides or rear. Note how they are presented in Apple sales materials, or on the show floor at Macworld Expo: a well-lit, shiny front, and the rest hidden in a dark, black, invisible box. The reality is that they swept a big, fugly, UNWORKABLE mess under the carpet.

They could mitigate that by placing a single USB and video port on the front of the box.

Or just simplify life and make it a 2U box at half the depth, or even 75% of the depth.

But no, they are slaves to fashion.

Carl said:

“We looked at using 1U’s, but given the power density per rack and cooling constraints, the 2U Intels are a better fit for us.”

Indeed. Floorspace is all about power density and 1U boxes with dual PSUs just make zero sense in any datacenter larger than a closet.

It’s more than fashion — it’s the IT stigma, and the pricing structure behind it. Most colo facilities charge more for 2U or 4U servers, because it takes up that valuable rack space. They make no mention of depth.

The network geeks in most large datacenters have that same geek porn problem. They value vertical space over anything else, and the manufacturers bow to it.

1U @ ~30″ is less efficient than 2U @ ~18″.

If somebody is charging you more for the latter, they are idiots. Rackspace exists in 3 dimensions.

That said, I don’t think the server manufacturers are being driven by that at all. I think they don’t even bother to look outside their own labs, and they certainly never look at any “real world” datacenters.
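The claim that 1U @ ~30″ is less efficient than 2U @ ~18″ can be made concrete with a toy floor-density model. This is a minimal Python sketch, not anyone’s actual planning method. It builds row spacing from the ingredients listed in the post (chassis depth, cables, people space, and room to slide the chassis fully out), with one shared aisle between the back of one row and the front of the next. The aisle width, cable allowance, 42U rack height, and identical 8 A draw for both boxes are all hypothetical placeholders.

```python
# Toy floor-density model: 1U @ ~30" versus 2U @ ~18".
# ASSUMPTIONS: every constant below is a hypothetical placeholder, and both
# boxes are assumed to draw the same 8 A maximum.
from dataclasses import dataclass

RACK_UNITS = 42            # assumed usable rack height, in U
PEOPLE_AISLE_IN = 36.0     # hypothetical walking space per row, inches
CABLE_ALLOWANCE_IN = 6.0   # hypothetical space for cords behind the chassis


@dataclass
class Server:
    height_u: int      # rack units tall
    depth_in: float    # chassis depth, inches
    max_amps: float    # assumed maximum draw at 120 V


def row_pitch(s: Server) -> float:
    """Row-to-row spacing: chassis + cables + people space + room to slide
    the chassis fully out (modelled as one extra chassis depth)."""
    return s.depth_in + CABLE_ALLOWANCE_IN + PEOPLE_AISLE_IN + s.depth_in


def servers_per_rack(s: Server, budget_amps: float) -> int:
    """Whichever limit bites first: rack units or the rack's power budget."""
    return min(RACK_UNITS // s.height_u, int(budget_amps // s.max_amps))


def density(s: Server, budget_amps: float) -> float:
    """Servers per inch of row pitch. Rack width is held constant, so this
    is proportional to servers per square foot of floor."""
    return servers_per_rack(s, budget_amps) / row_pitch(s)


if __name__ == "__main__":
    xserve_1u = Server(height_u=1, depth_in=30.0, max_amps=8.0)
    short_2u = Server(height_u=2, depth_in=18.0, max_amps=8.0)  # hypothetical box
    for budget in (60.0, 1000.0):  # a realistic budget vs. an effectively unlimited one
        print(f"{budget:.0f} A/rack: 1U x 30in = {density(xserve_1u, budget):.3f}, "
              f"2U x 18in = {density(short_2u, budget):.3f} servers per inch of pitch")
```

With a 60 A rack budget, both boxes are power-capped at 7 servers per rack, so the shallower 2U box comes out ahead purely on row pitch (78″ versus 102″ with these placeholder values). With an effectively unlimited budget, the 1U box wins instead, which is the point: the argument turns on power and cooling being the binding constraint, not rack units.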