So yesterday two new clients moved into our facility. I always do my best to make sure that these initial experiences are positive ones. One of the clients was a half-rack and they were just doing preliminary installation of some network gear prior to their eventual move of servers. That one went pretty well.

The other one was a bit more complicated, but certainly within the realm of “simple.” They wanted a full rack, with an adjacent empty rack for future growth. They also needed a 120V/30A electrical circuit. These are becoming fairly standard in full-rack installs due to the electrical requirements of 1U servers. “Standard” electrical for a rack is two 120V/20A circuits. This was fine in the old days but it won’t cut it with 42U of multi-CPU boxen.

We have several racks and cabinets with 30A “twist-lock” circuits, but only one has an adjacent empty rack. In reality it isn’t empty, it has one server in it, but that server is an old, almost retired d.f DNS server, so I figure this is an ideal location for the new client. It is also RIGHT underneath the hot air return of our primary HVAC system… the perfect place to put high-density, heat-creating clients. The HVAC runs much better when it has very hot air going into it.

I make sure that the rack is prepped and ready. Kevin, our facilities manager, makes sure the 30A circuit is there and working. I gather up all the tools the client will need: cage nuts, rack screws, screwdrivers, tie-wraps, velcro-wraps, that damn little cage-nut tool to save your fingers from certain damage, a bubble-level, etc. All this is on a cart near their rack. I put the step ladder near their rack. I pre-wire their network connection (with switch-configuration help from Kyle, my awesome network manager). I even put a trash can next to their rack, charge the battery of the cordless drill, and fetch a cart for them to bring their servers up the elevator. All that’s left for me is to wait for them. What could go wrong?

Plenty, it turns out. Of course, they are doing a late-night Friday cut-n-run from their previous provider, so Murphy rears his ugly head.

First, they email me around 4:30 PM and send me a list of what they are bringing. They had requested a 30A twist-lock circuit, but I note the lack of a 30A twist-lock power strip. Usually when a client requests a 30A, they have the gear to handle that sort of circuit. These guys don’t. Of course, this is not the sort of item you can go pick up at Fry’s… or even Graybar. They usually require ordering and a two-week wait. These guys will be here in a few hours. I tell their sales guys that they don’t have one and we scramble to find one. Kevin calls Graybar, on the off chance they have one… Nope. We call a few other electrical supply places. Nada. We do have a large client… our second largest, who has several racks they JUST purchased, one of which is still empty, that have twin 30A twist-lock power strips. I suggest to the sales team that they ask real nice. This wonderful client agrees to exchange one for a later order. I pull it from their rack and prep it for install. Later, as I’m in our in-the-process-of-relocating build-lab looking for the chargers for a cordless drill’s batteries, I discover… a 30A twist-lock power strip! Sigh. Typical Friday night install…. grr.

The new client arrives around 11 PM. I greet them, show them where they’re going to install, and hand them off to Nick on the night shift. I start driving home. I didn’t mind waiting until late to drive home as the Friday night commute for me going north is always miserable. They originally stated an 8-9 PM arrival time, but were a few hours late. “Even less traffic,” I thought! Wrong. The I-5 express lanes were closed by the time I reached them, and they had closed three lanes in Lynnwood for construction, so I waited in an interminable backup at the north end of town. Then, later I’m flying along and pass a Lexus SUV and my Valentine detector goes bonkers with a laser warning. This is the area of I-5 where the WSP heavily enforces the speed limit and I’m getting lasered from behind doing more than 25 MPH over the limit… I figure I’m completely screwed.

The detector just doesn’t shut up… keeps going off in that REALLY loud “SLOW DOWN NOW YOU IDIOT” tone that Valentine has chosen for the laser warning. I’m dropping speed as fast as I can and keep looking in my rearview for the flashing light… but instead the Lexus passes me and the alarm stops. After a bit I speed up again, and sure enough, passing the Lexus produces the screeching alarm again! They must have something coming off the front of the SUV that is triggering the laser alarm. I try to put distance between me and the annoying SUV, and of course THEY SPEED up too! I even try the “Diesel smoke screen trick” and that doesn’t help. I drive the last 8 miles of my I-5 route in north Snohomish county with my laser signal going off every few seconds.

As I’m about to exit, my cell phone rings and it is the office. Nick Rycar tells me that the client’s servers won’t fit in the rack we’ve prepared. Whisky. Tango. Foxtrot. ?? !! A 19″ rack is a 19″ rack… this is an industry standard ferrchrissakes… how could they not fit? Nick tells me there is some sort of lip on the vertical posts of the rack and their Dell rails won’t fit on them. I’m thoroughly confused. We’ve had these racks for years and have never had an issue fitting a server into them… ever. He describes the servers as being “too wide” to fit in them.

First off, I hate talking on the phone while driving, because I am never able to think analytically while making forward progress in a motor vehicle. To me driving is a Zen-like activity that consumes all my CPU cycles. It is one of the things I really enjoy about driving. So I’m trying to figure out what Rycar is talking about and it just doesn’t compute in my brain. I ask if they are using the cage nuts in a vertical orientation, because I know they allow for a certain amount of horizontal drift off the 19″ width. He doesn’t know what I’m talking about. I tell him that in about 5 minutes I’ll be at home and he can call me on my extension as I’ll be out of cell range.

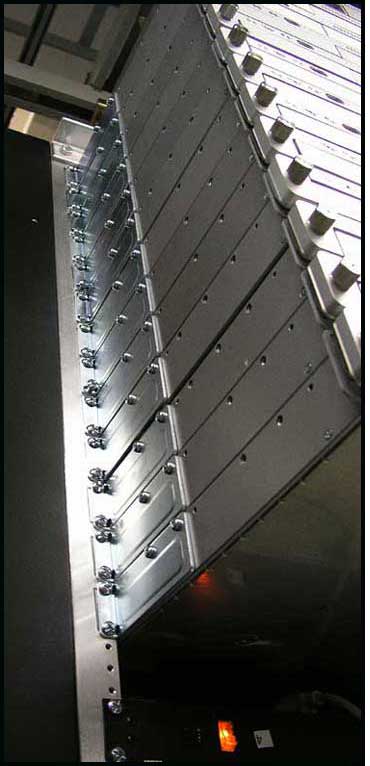

I arrive home and do the stealthy stumble through the dark house in an attempt to not wake anyone up, and wander off to the bedroom where my office is because I know I’ll be getting calls on my IP phone there. Sure enough, I can’t log into it for some dumb reason. I pop open my laptop and thankfully Kyle is online (doing his scheduled maintenance on the BGP routers) and assists me with the IP phone-fu to get myself logged in. I call the datacenter and Nick assures me that they’ve swapped out the vertical rails in the rack with some from another rack that look like they’ll work. (The photo above is from his cell phone, which he shared with Kyle when they were trying to figure out why the Dell rails would not fit in the posts.) Satisfied, I nod off to sleep.

Like driving, sleep is something I’m very good at. I can sleep through anything. I’m lying there… probably an hour after falling asleep when my IP phone starts ringing. I have no idea how many times poor Nick called my extension and dropped to voice mail before waking me up… but the ringing finally pulled me back to semi-consciousness. I stumble towards my desk, and pick up… just as it goes to voicemail… again. I call the datacenter extension. Rycar answers and says the other vertical posts ALSO don’t fit. He says they’ve given up on getting the server rails mounted and they’re going to try putting them on shelves in the racks. I’m ok with that and likely am asleep before I even get fully horizontal.

About an hour later, who knows… the phone wakes me up again. Nick has the client, who wants to talk to me. I’m really not very coherent, but the guy says basically “this isn’t working… we have three choices: find somewhere else to locate here, (I forgot the other… remember it was 3:30 am and I was just awoken), or move back to our old provider.” I don’t recall much else of what was said, but I do recall a phrase… “we are really disappointed in digital.forest.”

Ouch. That hurt. Here I’d made sure that everything was just right… and some oddball hardware fitment issue that nobody could have predicted had caught us all unaware. Like I said to Nick earlier on the phone when I was driving home: “A 19″ rack is a 19″ rack. This is an industry standard. It makes no sense that something would not fit.” Of course, now as I’m fully awake and typing this I realize that they had Dell gear, and there was a time period where Dell gear was sold with Dell-specific mounting hardware, designed specifically to fit in Dell-supplied, Dell-labelled, Dell-sold racks. Maybe that is what bit us in the ass? I don’t know, and won’t know until Monday when I can have a look at this stuff myself.

That stinging phrase wakes me up enough to have a moment of clarity and I suggest that there IS another cabinet, one of our 23″ wide ones (that we have modified to be 19″) at the end of row 11 with a 30A electrical circuit (two IIRC) that is available. The only reason I didn’t suggest it to start with is that there is not an empty one adjacent to it. The client couldn’t care less about their future expansion needs at the moment, as he just wants to get their stuff online right now. They agree to move there. I speak with Nick a bit, and let him know to only move them to an electrical circuit off PDU#1, as PDU#2 is at capacity (PDU#4 will be installed soon!). He checks and sure enough, both circuits are from PDU#1, so I hang up and assume the train should be back on the rails, if you’ll pardon the pun.

I’m awake now… it seems. It is almost 4am, so I flip on the TV, hoping to catch the start of Le Mans on Speed TV. Nope… they are running an infomercial about a drill bit sharpener… sigh. I flip it off, lie down and fall asleep instantly.

Sue comes in around 10 am and wakes me up to tell me she’s off to run some errand or whatnot. I drag my ass out of bed. I’m in that weird state of sleep-shift… almost like jet lag. Disoriented due to waking up so off my usual schedule. I flip on the TV, still on Speed, hoping to catch some Le Mans coverage. They are interviewing some pit technician and showing this NOC-like setup of 20 flat screen monitors showing car telemetry and whatnot. Then I discover this isn’t Le Mans at all… as they pan up to show the skyline of Indianapolis. It is qualifying at the USGP. WTF? There is an actual race going on; I don’t want to see qualifying! Besides, there aren’t even cars on the track at Indy, so they play commercials and teasers for more NASCAR coverage… (yawn) for fifteen minutes! …at the end of which they say Le Mans coverage will begin again at 2pm PDT. Crap. I flip it off… literally and figuratively.

Murphy’s Law indeed.