An old college buddy called me last week. He works for a manufacturer that sells its product into datacenters (among other markets) and he wanted my insight, as a datacenter professional, into “this whole cloud thing”. It seems a colleague of his is trying to convince his whole company to prepare for the cloud computing paradigm shift, where “everything will exist within about 10 huge datacenters.” I have to admit I laughed when I heard that. My friend wanted to know what impact cloud computing will have on the future… “Is what this guy is saying really going to happen?”

In my answer I went over how much of the thinking and hype surrounding cloud computing is built upon fallacies, while ignoring the market realities. Let me outline those fallacies here:

Fallacy #1. New technology always supersedes old technology.

I wish I had a dollar for every time I heard or read something akin to “Everything will move to the cloud.” The basis of this statement is a deeply-held fallacy in the minds of so many people who follow technology, most of all the “pundits” one reads in the trade rags and blogs. Everything? Really? This fallacy is an extension of an old economic fallacy, that of the limited market: any gain X makes in a market must mean that Y & Z lose. For some reason, perhaps the desire of people to sort everyone and everything into piles named ‘winner’ and ‘loser’, they assume that in any given market there can only be one winner and everyone and everything else loses. So all thinking becomes weighted towards the supposed winner. In reality markets are in constant states of shift, and for all practical purposes have no limits. Further, if you have a product or service that satisfies a set of customer needs better than the competition, then you will make sales. Not all customers have the same needs, so there is no way that any single technology, service, or business model can exclude all others by its mere presence in the marketplace. Indeed, for “everything to move to the cloud” the cloud has to become the solution to all the needs of all the people. This is impossible. Additionally, needs change as conditions and states change. What I need now as I sit at home will be different from what I need sitting at my desk at work tomorrow, or while traveling next week. Cloud delivery assumes pervasive, persistent connectivity, which does not exist, and frankly likely never will. Technological tools should always have some usefulness in stand-alone, disconnected installations; otherwise they are just expensive, not to mention inefficient, boat anchors. The new replacing the old seems like the natural order of things; after all, we no longer drive horse-drawn buggies, right?
One could argue that the modern car is the natural evolution of the buggy, with the internal combustion engine merely replacing the horse itself as the prime mover. The aircraft did not replace the car, despite nearly all pundits in the middle of the 20th century predicting it. (Where are our flying cars anyway?) Nor have many other older technologies vanished; trains and ships still traverse the planet despite much “better” technologies having been developed since their invention. Television hasn’t fully replaced radio or movies. Those markets instead have expanded to allow all these technologies to survive in their own niches. Those niches may expand and shrink over time, but the new technology rarely, if ever, completely replaces the old. Bringing it back to information technology, even mainframes are still being built and sold, despite being perceived as technological dinosaurs. Why? Because they serve a need that cannot be met by newer technologies. If anything the market for mainframes remains about what it was a decade ago. Sure the market isn’t growing like it was in the 1960s, but it is likely actually larger in terms of physical units operating than it was back then. Cloud computing, even if it is wildly successful, will not replace the forms of computing we use today; it will only expand the markets and provide new solutions to some old, but mostly new, problems. That is where technologies really bloom and create markets: when they solve new problems, not when they replace the solutions for old ones.

Fallacy #2. Cloud computing is new technology.

It is not a new technology, just a new name. (See RFC 1925, Item 11 & corollary.) At its core “cloud computing” represents no new technology. It is just a buzzword-du-jour being applied to a collection of older technologies being packaged and sold in a new way. I’ve heard the term used to describe everything from Amazon’s EC2 to Skype, from Gmail to Salesforce.com. I find it hard to believe that these all fall into a single definition. Their sole commonality is that they are services delivered over the Internet. That would make my little website here part of The Cloud, and I can tell you it required no recent technological paradigm shift to spring into existence. It seems I’m not the only one with this opinion. If anything I would argue that cloud computing isn’t really about technology at all, but really a way of provisioning and selling computation. Before it was called “cloud computing” it was called various names at various times, as the concept iterated itself through history: Time Sharing, Client/Server, Network Computing, Thin Clients, Utility Computing, Application Service Provider, Grid Computing, Software/Platform/Infrastructure as a Service, etc. None of these terms, including “cloud computing”, describes any new technology, only ways of delivering or provisioning existing technology. It boils down to rental rather than purchase, period. If I rent you my car I cannot claim to have invented the automobile. Or the concept of renting it either.

Fallacy #3. Cloud computing will replace datacenters.

I’ve heard this fallacy from many sources, not just my friend’s colleague’s claim of the future where “everything will exist within about 10 huge datacenters.” Cloud computing represents no threat to the datacenter whatsoever. If anything it will just require MORE datacenters. That answers my friend’s worry, but how did this fallacy originate? Well, datacenters are very expensive. They are very expensive to build and very expensive to operate. As power densities (that is, the number of Watts available per square unit of floor space within a given datacenter) go up, so do construction costs. The current average cost to build a datacenter in the USA up to modern standards is between $1500 and $3000 per square foot. Compare this to the $150-$200 per square foot cost of the average office building in the USA and you’ll understand why CFOs tell their CIO/CTO counterparts to rent rather than buy. That is just the building part. Once the construction is complete you have to operate that facility, and that costs money too.
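
To put rough numbers on that comparison, here is a minimal Python sketch. The per-square-foot figures are the ones quoted above; the function names and the 10,000 square foot floor area are my own assumptions, picked purely for illustration.

```python
# Per-square-foot construction cost ranges quoted above (USD, low and high).
DATACENTER_COST_PER_SQFT = (1500, 3000)
OFFICE_COST_PER_SQFT = (150, 200)


def build_cost_range(area_sqft, cost_per_sqft):
    """Return the (low, high) construction cost for a given floor area."""
    low, high = cost_per_sqft
    return area_sqft * low, area_sqft * high


def cost_multiple_range(dc=DATACENTER_COST_PER_SQFT, office=OFFICE_COST_PER_SQFT):
    """Return (smallest, largest) ratio of datacenter cost to office cost.

    Smallest: cheapest datacenter against priciest office.
    Largest: priciest datacenter against cheapest office.
    """
    return dc[0] / office[1], dc[1] / office[0]


if __name__ == "__main__":
    area = 10_000  # hypothetical floor area in square feet
    print("Datacenter:", build_cost_range(area, DATACENTER_COST_PER_SQFT))
    print("Office:    ", build_cost_range(area, OFFICE_COST_PER_SQFT))
    print("Multiple:  ", cost_multiple_range())
```

For that hypothetical 10,000 square feet, the datacenter comes to $15-30 million against $1.5-2 million for the office, a multiple of somewhere between 7.5x and 20x.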

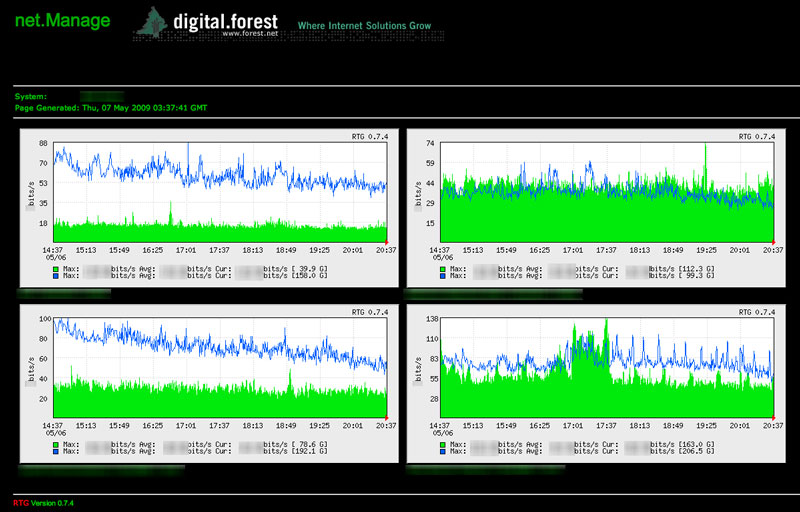

When you boil down what a datacenter does, it is pretty simple. A datacenter is a facility that turns electricity into bits, usually on a grand scale. The by-product of that industrial process, like so many other industrial processes, is heat. Power comes in, usually in vast quantities, and gets burned up by the silicon and rotating discs and transformed into bits, which exit the facility on the wires to be delivered to you, the consumer of bits. This makes heat, which has to be mitigated by mechanical cooling because without cooling the computers will fail faster. This very website resides on a server in a datacenter. This server runs 24 hours a day, and even when people are not reading these bits it burns up energy and makes heat all day and night. Multiply that by the millions, if not billions, of servers running in datacenters around the globe. Now layer on top of that the cooling systems to mitigate the generated heat and you’ll see how operating these facilities is very costly.

Now let’s ice this cake. Moore’s Law has a flipside: the more powerful you make a computer (due to that increase in transistors on silicon) the more power it will consume. Nowhere is this as plain to see and feel as within the datacenter. When a datacenter is built, it usually has a fixed amount of power allocated to it in the form of amps (or if purely AC power, perhaps in VA) which it cannot exceed; this is its total electrical capacity. That power is then split, traditionally around 50/50, between “IT Load”, in other words the servers and the UPS gear, and “Mechanical Load”, which is dedicated to cooling the IT Load. Watts are what is consumed by the facility as it runs. By analogy, if you think about your car, the amps/VA/capacity is the limit of the engine’s power, while Watts are the gasoline the car consumes over its lifetime. So building a datacenter is like buying the car, and the operating costs are the consumables it uses over its lifetime. Like automobiles, datacenters have seen increases in capacity and power over the last several decades. In the 1960s the datacenter was a large room with a few, or maybe just one, large computer. The advent of the mini/micro/personal computers has transformed the datacenter. Now they are large buildings with thousands, if not tens of thousands, of computers in them. The electrical capacities and densities of datacenters have risen exponentially as well. A decade ago 90 Watts per square foot was a high-end facility; now nobody would bother building such a datacenter. 300-600 Watts per square foot is common today, and higher densities are planned or even already complete. The tighter you pack the servers the hotter it gets inside the datacenter. Computers have become consumables themselves, and some are now building datacenters with minimal cooling, assuming that heat-related failure is just the signal that it is time to replace the server anyway.
The building and the electricity are the true expenses and the computers are cheap commodities, just there to be used until they fail. Turning decades of IT thinking on its head! Maybe Dilbert was wrong?
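
The capacity split and the density figures above can be sketched in a few lines of Python. This is a back-of-the-envelope illustration, not a design tool: the 1.8 MW total capacity is a made-up number, the function names are mine, and I am assuming the Watts-per-square-foot density is measured against the IT Load only.

```python
def it_load_watts(total_capacity_watts, it_fraction=0.5):
    """Portion of total capacity left for IT Load under the traditional 50/50 split."""
    return total_capacity_watts * it_fraction


def supported_floor_area_sqft(total_capacity_watts, density_w_per_sqft, it_fraction=0.5):
    """Floor area the IT Load can feed at a given density.

    Assumption: density (W per sq ft) counts IT Load only, not cooling.
    """
    return it_load_watts(total_capacity_watts, it_fraction) / density_w_per_sqft


if __name__ == "__main__":
    capacity = 1_800_000  # hypothetical facility capacity: 1.8 MW
    for density in (90, 300, 600):  # densities mentioned in the text
        area = supported_floor_area_sqft(capacity, density)
        print(f"{density} W/sq ft -> {area:,.0f} sq ft of IT floor")
```

The same electrical capacity that once fed 10,000 square feet at 90 Watts per square foot now feeds only 1,500 to 3,000 square feet at 300-600, which is why it gets so hot in there.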

Datacenters may be changing, but cloud computing doesn’t change datacenters or their economics. Cloud computing providers still have to build and run datacenters (or rent the space from colocation providers). Those require capital expenditure. In order to pencil out economically, the cloud provider has to either charge their customers enough to pay for the build in a reasonable amount of time and cover the monthly operating costs, or oversell their capacity and hope it doesn’t bite them in the ass. This is why I’ve said the only reasonable current cloud provider business model is Amazon’s, which is based on excess capacity. Essentially the cloud customers contribute to Amazon’s datacenter ROI while they scale their own operations. It is a brilliant model. But anyone who is starting out as a stand-alone cloud provider faces a rough road to profitability.
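
“Penciling out” is simple arithmetic, and a minimal Python sketch makes the pressure obvious. Every number below is hypothetical and the function name is my own; the sketch also ignores financing, depreciation, and taxes, so treat it as an illustration of the shape of the problem rather than a business plan.

```python
def required_monthly_revenue(build_cost, payback_months, monthly_operating_cost):
    """Revenue needed each month to repay the build over the payback period
    while also covering monthly operating costs.

    Simplification: no interest, depreciation, or taxes.
    """
    if payback_months <= 0:
        raise ValueError("payback_months must be positive")
    return build_cost / payback_months + monthly_operating_cost


if __name__ == "__main__":
    # Hypothetical inputs: a $24M build, a five-year payback, $200k/month to operate.
    needed = required_monthly_revenue(24_000_000, 60, 200_000)
    print(f"Required monthly revenue: ${needed:,.0f}")
```

With those made-up inputs the provider needs $600,000 a month from customers before seeing a dime of profit. Shorten the payback period or raise the power bill and the number only climbs.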

All the world’s computing needs cannot be collapsed into those “ten huge datacenters” my buddy heard about. The reality is that as industry, business, and society use more and more information technology there will be more and more datacenters. They will range in scale from re-purposed broom closets to giant campuses of warehouse-sized facilities. Many organizations have very specific needs that cloud computing may never be able to address, and for them there always has to be the choice of a traditional facility…

Fallacy #4. Cloud computing can work for any IT need.

This is more of an inference from the “everything will move to the cloud” statement I hear so often. There are several IT needs that cannot be solved with cloud computing. Meeting audit requirements is one. I’ve written about this fallacy before, and it caused a bit of an uproar. It seemed to be the first time anyone brought this issue up, and it became a hot topic in the cloud blogosphere for a short time. I felt vindicated when a cloud provider admitted what I said was true.

The basis of cloud computing is the same basis as web hosting: your data on somebody else’s servers. The same reasons that people choose not to use a web host apply to a cloud provider. Control of assets. Risks associated with overselling capacity. Support concerns. Interoperability concerns. There are literally hundreds, if not thousands, of reasons why IT organizations and individuals would prefer to keep their data out of a cloud computing system. Most resolve to a single word, which is trust. That brings us to our last fallacy…

Fallacy #5. The cloud is secure.

Cloud computing is no more secure than any other form of computing, which is to say, not very. Or perhaps more accurately, as secure as it is designed and managed to be. For an excellent analysis of data security in a cloud environment I highly suggest a read of Rich Mogull’s thoughts on the subject. Rich obsesses about all things security and does a far better job than I could in delving into the specifics. To his analysis, however, I’ll add a more encompassing and less data-specific view of security, one that is more about trust and consequences than about the integrity of the data itself.

The greatest hurdle to the widespread acceptance of cloud computing is trust. Trusting one’s data to systems whose location, condition, environment, and state of load are virtually unknown is a difficult thing to do. Many of these questions apply to any services (hosting, colocation, SaaS, etc.) purchased online, but the “cloudy” nature of cloud computing amplifies many of them beyond the simple answers found in other scenarios: How well is that data protected? How stable is the company that owns the infrastructure? Is the datacenter owned and maintained by the cloud provider, or is it colocated in some other company’s facility? If the latter, is the cloud provider keeping current on all its bills, or is their installation subject to suspension by their colo provider? What about bandwidth? Is the cloud provider multi-homed? Do they have geographic redundancy? What happens when the power goes out? Have they tested their generator(s)? How well are their power backup, network, and HVAC systems maintained? Is there anyone on-site if something goes wrong? What sort of SLAs do they have? What happens to our data if the cloud provider goes out of business? What sort of security is in place to monitor their customers? What happens if somebody else on the system(s) we’re using is a spammer? How do they handle blacklisting? Could AUP violations by other customers impact our operations? Are assigned IPs SWIPed to customers, or does everything track back to the cloud provider? What happens to our data when we scale back usage, or cancel our service? I could go on and on.

Many of these issues resolve to how much you can trust your cloud provider. Trust takes a long time to build. Most of what IT handles is fairly critical corporate data and infrastructure, so it may be some time before trust is built up enough to move much of this sort of data to cloud deployments. Trust can also evaporate almost instantly, so all it will take is a single high-profile cloud-related failure to put all cloud business at risk.

Now it may seem that I’m somehow “anti-cloud”. Nothing could be further from the truth. It is a sensible method for provisioning computing resources on demand, and fulfills a very real market niche. I just do not believe that it is the answer to every IT problem, nor is it the future of IT, only a small portion of it. Cloud computing will expand the market. I can envision a very near future where companies use a hybrid of traditional dedicated datacenter resources with cloud deployments to extend, replicate, or expand as demand warrants. The cloud is indeed a new paradigm, but it lacks the underlying “shift” that alters the entire industry around it. The pundits should sheath their hyperbole and focus on what cloud computing can do for people, rather than what it will do to the marketplace.