After years of decline, data centers are back

Finally. The press has caught on to our (pardon the pun) current reality. *

They are, as per usual in the press, wrong on the details. The industry has never been in “decline”, but it is in good shape at the moment.

Power is the name of the game, and we actually have it. InterNap, at least in Seattle, does not. We are using only 10% of our available power capacity, and we have the right amount of floor space to handle a fairly dense install. InterNap, in contrast, restricts its customers at Fisher Plaza to half racks by capping their power.

Back in the bad days of 2001-2003, we saw a small burst of growth as colo facilities in the Seattle market started closing. Exodus, Colo.com, Verio, Level 3, Qwest, Zama, etc. were shutting down unprofitable datacenters. Some just vanished; others relocated their customers to California or Colorado. We were able to pick up a lot of new colocation business at the time, ironically because we were a small, local company with almost a decade of history… unlike the large, non-local companies, which were brand new and burning a pile of VC cash. Suddenly the demand was for businesses doing the new economy in an old-fashioned way – with revenue from customers, not investment from venture capital funds.

The one company that consistently won the larger business (multi-rack installs) away from us was InterNap. Prior to the new economy meltdown of 2001, digital.forest was a value-priced colocation facility. We were priced at a sustainable level, but still much lower than Exodus or similar facilities. We had to be, as we were small and our facility was, at that time, very second-tier. InterNap, in contrast, was in a brand-new, state-of-the-art facility in Seattle’s Fisher Plaza. What compounded the problem for us was that InterNap was losing money hand over fist, but was buoyed by cash from a pre-crash IPO. They had a huge facility to fill, and were practically giving it away. Their prices were unsustainable… insanely low. A full 50% below our pre-crash prices, sometimes even lower. We didn’t have a mountain of cash to burn, nor did we have thousands of square feet of empty datacenter, so we couldn’t match those prices, and we lost virtually every one of the larger bids we put out to InterNap.

A few years later, we moved into one of those “state-of-the-art” colocation facilities left behind by a failing “dotcom”, and suddenly we found ourselves in a facility equal to, if not better than, InterNap’s at Fisher Plaza. I’d argue the latter position, as we have a landlord (Sabey) that truly understands our business model (they built most of these facilities for the now-mostly-gone colo providers) and agreed to let us manage our own facility infrastructure. We maintain the HVAC and backup power systems directly, rather than second-hand via the landlord, as with Fisher. So as the datacenter economic landscape has been improving over the last few years,** prices have once again started to rise and are just now reaching sustainable levels again. But just as InterNap is going back to those clients it gave away space to back in the day to tell them about higher prices, it also cannot provide them with any more power. I said it back in 2002, and I’ll say it again now: “I encourage my competitors to operate this way.”

Today’s savvy colocation customer expects to pay market rate for rack or floor space, but they also expect to have custom power solutions delivered to their racks. The industry standard of two 20 amp circuits per rack went out with the Pentium III. Until the computer hardware and chip industries can get their act together and get power consumption under control, today’s racks require 60 amps, or MORE.
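To put rough numbers on that jump, here is a back-of-the-envelope sketch in Python. The amp figures come from the paragraph above; everything else is an assumption for illustration only: 120 V circuits, the common practice of loading a circuit to no more than 80% of its rating for continuous use, and a hypothetical 300 W draw per server. Real figures depend on the facility's voltage and the actual hardware.

```python
# Back-of-the-envelope rack power budget.
# ASSUMPTIONS (not from the post): 120 V circuits, an 80% continuous-load
# limit, and a hypothetical 300 W draw per server.

def usable_watts(total_amps, volts=120, derate_pct=80):
    """Usable continuous power in watts for a rack fed with total_amps."""
    return total_amps * volts * derate_pct // 100

def servers_supported(total_amps, watts_per_server=300):
    """How many servers of a given (hypothetical) draw fit in the budget."""
    return usable_watts(total_amps) // watts_per_server

old_standard_amps = 2 * 20   # two 20 amp circuits per rack
todays_rack_amps = 60        # "60 amps, or MORE"

for label, amps in [("old standard", old_standard_amps),
                    ("today", todays_rack_amps)]:
    print(f"{label}: {amps} A -> {usable_watts(amps)} W usable, "
          f"about {servers_supported(amps)} servers at 300 W each")
```

Under these assumptions the old two-circuit standard runs out of power long before a rack of 1U servers runs out of physical slots, which is the whole point: power, not floor space, is the constraint.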

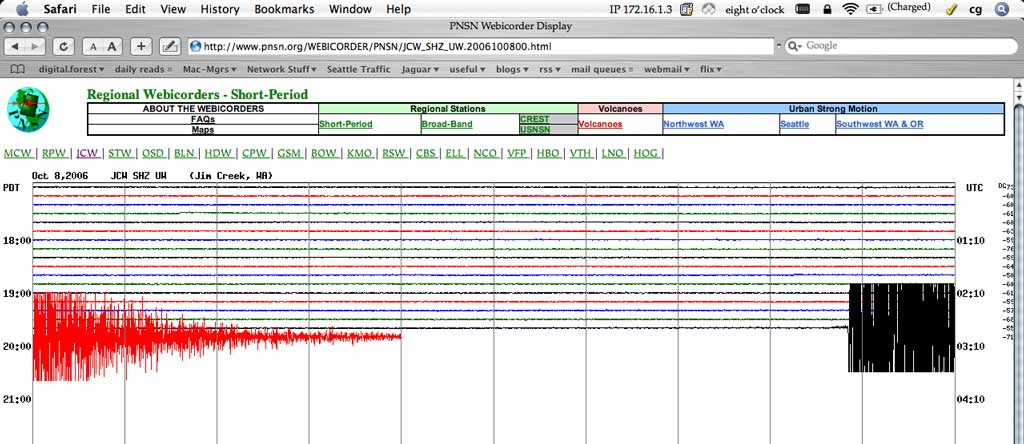

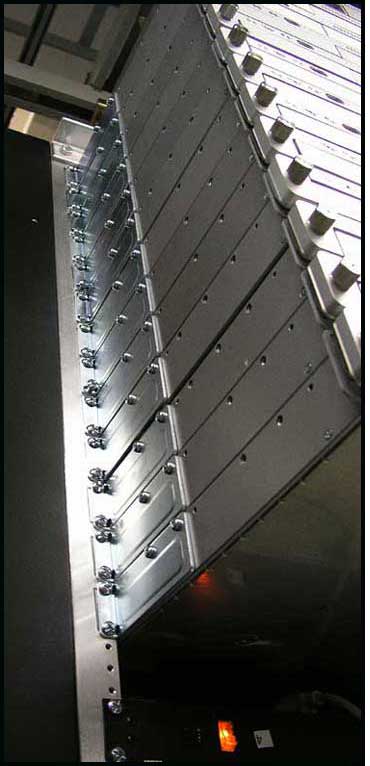

Above: near maximum density before going to blade servers. A digital.forest client’s installation of 1U and 2U multi-CPU servers, plus 3U disk arrays for storage. This client has been growing at a “rack per quarter” rate for the past year. They’ll be moving into a cage in our new expanded space around the holidays.

We are finally in a position to turn the tables on InterNap, as we have space and, more importantly, power and cooling capacity to spare, right as the market heat is approaching “boil”. For once, we are sitting on the right side of the supply/demand curve.

* I’m linking to the story in the PI, mostly because it is a local paper about a local company (well, USED to be local) in our industry. I first read it online in the New York Times, but they have that massively annoying “free registration system” and I don’t know how many of my readers are savvy enough to get through that with “bugmenot”… thankfully the Seattle PI picked it up.

** I’d say that the datacenter economy never really went down. If anything, its growth has been a rock-steady linear graph since our beginnings in 1994. The only odd year was 1999, when it experienced a doubling, but every other year has seen roughly 50% growth, even the “bad” years of 2001-2002. What happened was that between 1998 and 2001 the industry overbuilt capacity. Everybody was investing in datacenters, and as a result a classic over-supply, under-demand situation arose that artificially depressed the datacenter industry.
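For the curious, the growth figures in that footnote compound quickly. The sketch below is only an illustration: it takes 1994 as a baseline index of 1.0, applies roughly 50% growth each year with a doubling in 1999, and stops at 2002 because that is the last year the footnote mentions. The function name and the index itself are made up for this example, not real revenue or capacity data.

```python
# Compounding the footnote's growth rates into an index (1994 = 1.0).
# ASSUMPTIONS: a flat 50% in every year except 1999 (100%), ending in 2002.
# The index is illustrative, not actual business data.

def growth_index(start_year, end_year, default_growth=0.5, overrides=None):
    """Return {year: index} with the start year fixed at 1.0."""
    overrides = overrides or {}
    index = 1.0
    series = {start_year: index}
    for year in range(start_year + 1, end_year + 1):
        index *= 1.0 + overrides.get(year, default_growth)
        series[year] = index
    return series

series = growth_index(1994, 2002, overrides={1999: 1.0})  # 1999 doubled
for year, value in series.items():
    print(f"{year}: {value:6.2f}x the 1994 level")
```

Steady percentage growth like this plots as a straight line only on a logarithmic scale; on an ordinary chart it curves upward, which makes the "bad" years look even less like a decline.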